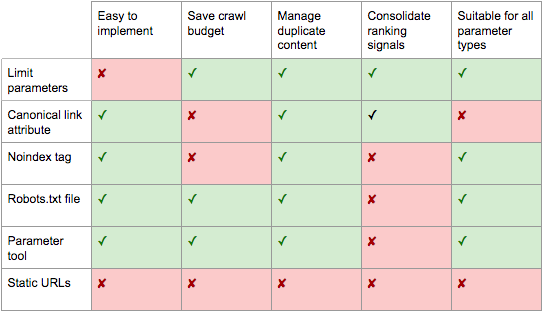

Advanced robots.txt strategies for managing dynamic URLs and parameters focus on controlling crawler access to avoid duplicate content, preserve link equity, and improve crawl efficiency.

Key strategies include:

- Parameter Handling via Robots.txt and Google Search Console: Google Search Console's URL Parameters tool used to let site owners specify how Googlebot should treat URL parameters, distinguishing between those that change page content and those that don't. Google retired that tool in 2022, so parameter handling now relies on robots.txt rules and canonical tags. Robots.txt can disallow crawling of URL patterns with unnecessary parameters to reduce duplicate crawling, but use it carefully to avoid blocking important content.

- Canonicalization: Implement canonical tags on dynamic pages to point search engines to the preferred URL version. This consolidates ranking signals and prevents the dilution of link equity caused by multiple URL variants.

-

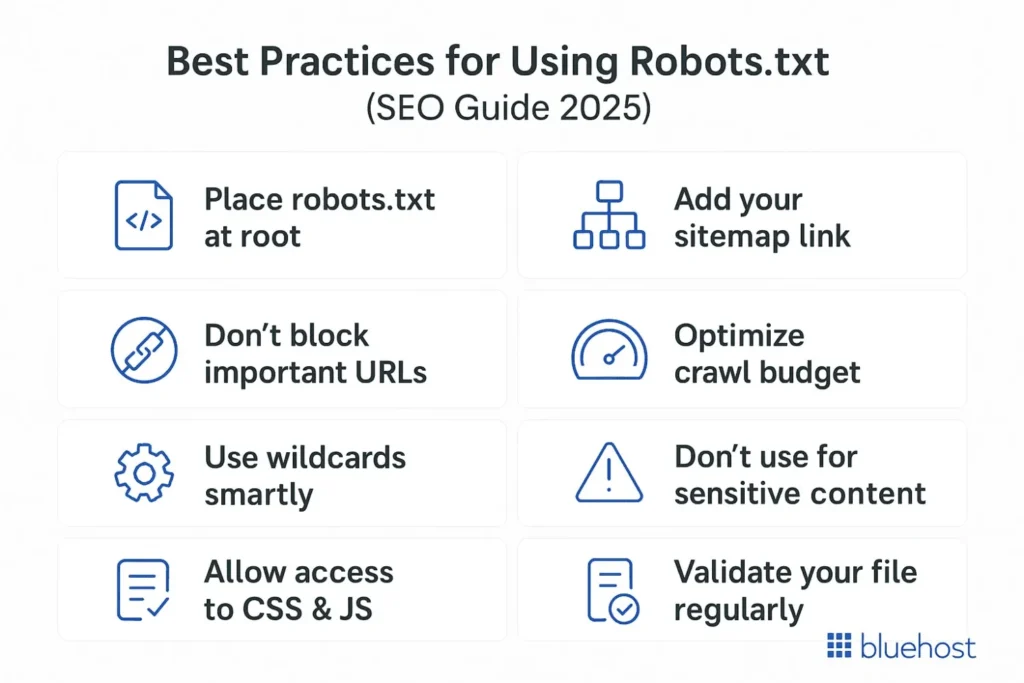

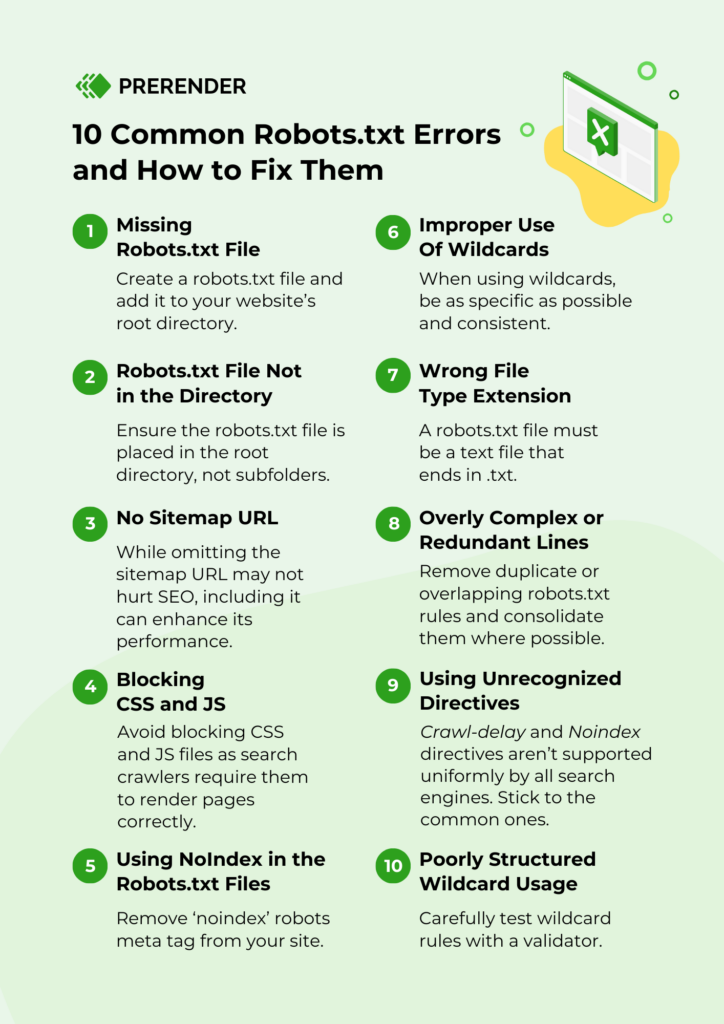

Selective Blocking with Robots.txt: Use relative paths in robots.txt to disallow crawling of specific dynamic URL patterns or directories that generate duplicate or low-value content (e.g., session IDs, tracking parameters). Avoid blocking critical resources like JavaScript and CSS to maintain site functionality.

- Sitemap Inclusion: Reference your XML sitemap(s) in the robots.txt file using absolute URLs. This helps search engines discover the main content URLs you want indexed, especially when dynamic URLs are excluded from crawling.

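- Avoid Unsupported Directives: Do not use `noindex` or `crawl-delay` in robots.txt to control Google. Google stopped honoring `noindex` in robots.txt in September 2019 and ignores `crawl-delay` (some other search engines, such as Bing, still read it). Instead, use a meta robots tag (`<meta name="robots" content="noindex">`) on pages you want excluded from indexing, and leave those pages crawlable: a crawler that robots.txt blocks from a page never sees its `noindex` tag.

- URL Rewriting: Where possible, rewrite dynamic URLs into clean, static-looking URLs to improve crawlability and user experience, reducing reliance on robots.txt for blocking parameterized URLs.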
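The parameter-handling and canonicalization advice above comes down to mapping many URL variants onto one preferred URL. The Python sketch below shows one way to compute that preferred URL before emitting a `rel="canonical"` link or a sitemap entry. It assumes a hypothetical site where `utm_*` tracking parameters, `gclid`, `fbclid`, and a `sessionid` parameter never change page content, and where parameter order never matters, so `?color=red&size=m` and `?size=m&color=red` can collapse into one URL. Which parameters are safe to drop is a per-site decision, not something this sketch can know.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters that never change page content on this site.
# Dropping a parameter that DOES change content would merge distinct pages.
IGNORED_PARAMS = {"gclid", "fbclid", "sessionid"}
IGNORED_PREFIXES = ("utm_",)


def is_ignored(name: str) -> bool:
    """Return True for tracking/session parameters assumed not to change content."""
    lowered = name.lower()
    return lowered in IGNORED_PARAMS or lowered.startswith(IGNORED_PREFIXES)


def canonicalize_url(url: str) -> str:
    """Map a parameterized URL variant to one preferred (canonical) form."""
    parts = urlsplit(url)
    # Keep only content-changing parameters, then sort them so that
    # parameter order no longer produces distinct URLs.
    kept = sorted(
        (name, value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
        if not is_ignored(name)
    )
    return urlunsplit((
        parts.scheme.lower(),
        parts.netloc.lower(),
        parts.path or "/",
        urlencode(kept),
        "",  # drop the #fragment; browsers and crawlers never send it to the server
    ))


if __name__ == "__main__":
    variants = [
        "https://Example.com/shoes?size=m&color=red&utm_source=news",
        "https://example.com/shoes?color=red&size=m&sessionid=abc123",
        "https://example.com/shoes?size=m&color=red#reviews",
    ]
    for variant in variants:
        # Each variant prints https://example.com/shoes?color=red&size=m
        print(canonicalize_url(variant))
```

Sorting the remaining parameters is a deliberate choice: it removes one common source of duplicate URLs without discarding any content-changing parameter.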

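In summary, the best practice is to combine robots.txt disallow rules for irrelevant or duplicate dynamic URL patterns with canonical tags for consolidating duplicates, so crawlers spend their time on valuable content without losing link equity. (Google Search Console's URL Parameters tool, which once covered part of this job, was retired in 2022.) Keep in mind that a crawler cannot read the canonical tag on a URL that robots.txt blocks, so use disallow rules for URLs that never need crawling and canonical tags for variants that should stay crawlable. Always test robots.txt rules to ensure critical pages remain crawlable and indexable.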
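Testing robots.txt rules for parameterized URLs needs wildcard support, and Python's standard `urllib.robotparser` does plain prefix matching without expanding `*` or `$` inside paths. The sketch below implements the matching rules Google documents and RFC 9309 standardizes: `*` matches any run of characters, a trailing `$` anchors the end of the URL, the longest matching rule wins, and `Allow` wins a tie. The robots.txt content, paths, and parameter names are hypothetical examples; the sketch assumes a single rule group for all crawlers and skips percent-encoding normalization, so treat it as a quick sanity check rather than a replacement for the search engines' own testing tools.

```python
import re

# Hypothetical robots.txt for a site with parameterized URLs.
ROBOTS_TXT = """\
User-agent: *
Disallow: /*?sessionid=
Disallow: /*&sessionid=
Disallow: /*?*utm_
Disallow: /search
Allow: /*.css$
Allow: /*.js$

Sitemap: https://www.example.com/sitemap.xml
"""


def parse_rules(text: str) -> list[tuple[str, str]]:
    """Collect (directive, pattern) pairs from Allow/Disallow lines.

    Simplification: assumes one group that applies to every crawler, and
    ignores User-agent and Sitemap lines.
    """
    rules = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # strip comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field in ("allow", "disallow") and value:
            rules.append((field, value))
    return rules


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt pattern: '*' = any characters, trailing '$' = end of URL."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(chunk) for chunk in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], path_and_query: str) -> bool:
    """Longest matching pattern wins; Allow wins a tie; no match means allowed."""
    best_len, allowed = -1, True
    for directive, pattern in rules:
        if pattern_to_regex(pattern).match(path_and_query):
            is_allow = directive == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len, allowed = len(pattern), is_allow
    return allowed


if __name__ == "__main__":
    rules = parse_rules(ROBOTS_TXT)
    # Expected: allowed, blocked, blocked, allowed
    for path in ["/shoes?color=red", "/shoes?sessionid=abc123",
                 "/search?q=boots", "/assets/site.css"]:
        print(path, "->", "allowed" if is_allowed(rules, path) else "blocked")
```

The example file needs two `sessionid` rules because the parameter can appear first in the query string (after `?`) or later (after `&`); a single `/*?sessionid=` rule would miss the second case.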

PH Ranking offers the highest-quality website traffic services in the Philippines. We provide a range of traffic services for our clients, including website traffic, desktop traffic, mobile traffic, Google traffic, search traffic, eCommerce traffic, YouTube traffic, and TikTok traffic. We have a 100% customer satisfaction rate, so you can buy large volumes of SEO traffic online with confidence. For 720 PHP per month, you can immediately increase your website traffic, improve your SEO performance, and boost your sales!

Having trouble choosing a traffic package? Contact us, and our staff will help you.
