ChatGPT is built on the Generative Pre-trained Transformer (GPT) architecture, a decoder-only variant of the Transformer neural network designed to process and generate human-like text. Here is a more detailed explanation of how it works:


Input Processing

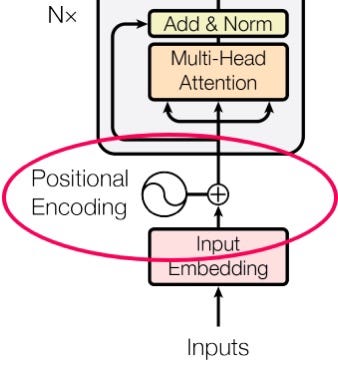

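The input text is first split into smaller units called tokens, which can be whole words, pieces of words, or punctuation. Each token is mapped to a dense numerical vector called an embedding. These vectors are learned during training, so tokens that are used in similar ways end up with similar representations. Because attention on its own has no notion of word order, positional information (a positional encoding or a learned position embedding) is added to each token's embedding.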
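The input steps can be sketched in a few lines of Python. This is a toy illustration under several assumptions: the whitespace tokenizer and six-word vocabulary are hypothetical (real GPT models use a learned byte-pair-encoding tokenizer), the embedding table is random rather than learned, and the positional signal is the sinusoidal encoding from the original Transformer paper, whereas GPT models typically learn their position embeddings.

```python
import math
import random

# Hypothetical toy vocabulary; real GPT tokenizers use learned subword (BPE) units.
VOCAB = {"<unk>": 0, "the": 1, "cat": 2, "sat": 3, "on": 4, "mat": 5}
D_MODEL = 8  # embedding width, tiny for illustration

def tokenize(text):
    """Split on whitespace and map each word to a token id (unknown words -> 0)."""
    return [VOCAB.get(word, VOCAB["<unk>"]) for word in text.lower().split()]

# Embedding table: one vector per token id. Random here; learned in a real model.
rng = random.Random(0)
EMBEDDINGS = [[rng.gauss(0.0, 0.02) for _ in range(D_MODEL)] for _ in VOCAB]

def sinusoidal_position(pos, d_model=D_MODEL):
    """Sinusoidal positional encoding (assumption: GPT models learn these instead)."""
    enc = []
    for i in range(d_model):
        angle = pos / (10000 ** (2 * (i // 2) / d_model))
        enc.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return enc

def embed(text):
    """Return token ids and one vector per token: embedding + positional encoding."""
    ids = tokenize(text)
    vectors = [
        [e + p for e, p in zip(EMBEDDINGS[tok], sinusoidal_position(pos))]
        for pos, tok in enumerate(ids)
    ]
    return ids, vectors

ids, vectors = embed("The cat sat on the mat")
print(ids)                            # [1, 2, 3, 4, 1, 5]
print(len(vectors), len(vectors[0]))  # 6 8
```

Note that both occurrences of "the" share token id 1 and the same embedding, but receive different final vectors because their positions differ.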

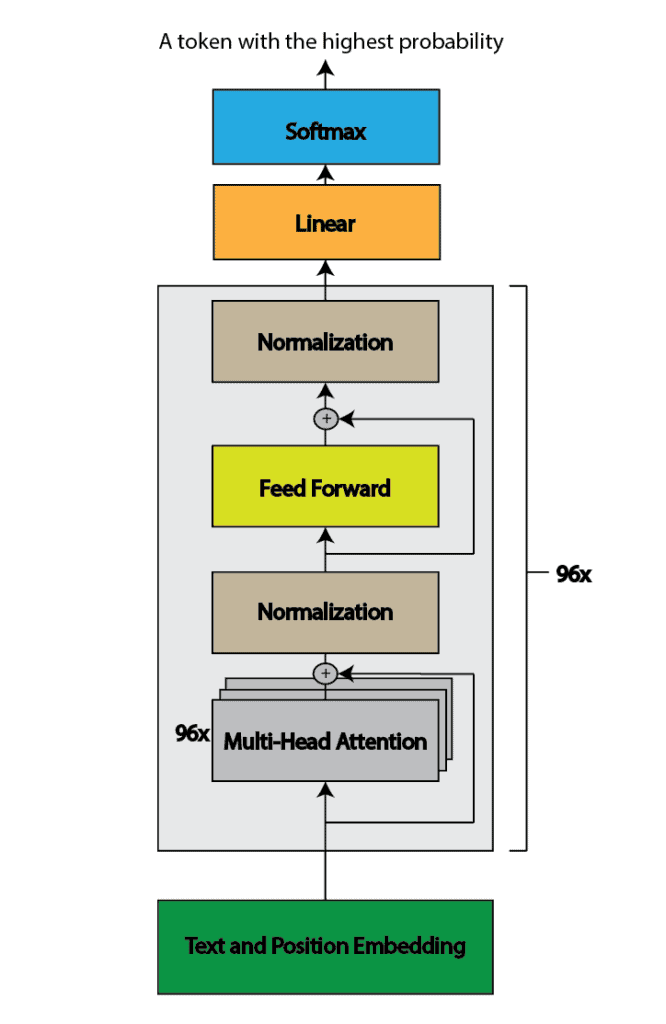

Transformer Layers

ChatGPT consists of multiple stacked transformer layers, each containing two key components:

- Self-Attention Mechanism: This lets each token "attend" to other tokens in the input sequence, helping the model capture context and relationships between words regardless of how far apart they are. In GPT models the attention is causal (masked): each token can attend only to itself and the tokens before it, so the model never sees the words it is trying to predict.

- Feed-Forward Networks: These apply nonlinear transformations to each token's representation independently after attention, enabling the model to learn complex language patterns.
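The feed-forward component can be sketched as two linear maps with a nonlinearity between them, applied to every token vector with the same weights. This is a minimal sketch under stated assumptions: the weights are random rather than trained, the GELU activation and the 4x hidden width follow GPT-2/GPT-3, and the residual connections and layer normalization that wrap each sublayer in a real transformer layer are omitted.

```python
import math
import random

def gelu(x):
    """GELU activation (tanh approximation), the nonlinearity used in GPT-2."""
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))

def linear(x, weight, bias):
    """Compute weight @ x + bias for one vector x (weight is a list of rows)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

class FeedForward:
    """Position-wise feed-forward network: expand, apply GELU, project back."""

    def __init__(self, d_model, d_hidden, seed=0):
        rng = random.Random(seed)
        self.w1 = random_matrix(d_hidden, d_model, rng)  # d_model -> d_hidden
        self.b1 = [0.0] * d_hidden
        self.w2 = random_matrix(d_model, d_hidden, rng)  # d_hidden -> d_model
        self.b2 = [0.0] * d_model

    def __call__(self, tokens):
        # The same weights are applied to every token vector independently.
        out = []
        for x in tokens:
            hidden = [gelu(h) for h in linear(x, self.w1, self.b1)]
            out.append(linear(hidden, self.w2, self.b2))
        return out

ffn = FeedForward(d_model=4, d_hidden=16)  # hidden width = 4 * d_model, as in GPT-2/3
tokens = [[1.0, 0.0, -1.0, 0.5], [0.2, 0.3, 0.1, -0.4]]
print([len(v) for v in ffn(tokens)])  # [4, 4]
```

Because each token is processed on its own, mixing information between tokens happens only in the attention sublayer; the feed-forward sublayer transforms what attention has gathered.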


Attention Mechanism Details

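The self-attention mechanism derives three matrices from the input embeddings by multiplying them with learned weight matrices: Query (Q), Key (K), and Value (V). The model computes attention scores as the dot products of the queries with the keys, divides them by the square root of the key dimension d_k, masks out future positions, and applies a softmax to obtain attention weights. Each token's output is then the weighted sum of the Value vectors; in compact form, Attention(Q, K, V) = softmax(Q·K^T / sqrt(d_k))·V. Multiple attention heads, each with its own weight matrices, run in parallel (multi-head attention), allowing the model to focus on different parts of the input simultaneously. Their outputs are concatenated and projected back to the model dimension.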
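The attention computation described above can be sketched in plain Python. This is an illustrative sketch, not ChatGPT's implementation: the projection weights are random placeholders, the sizes are tiny, and `multi_head_attention` is a hypothetical helper name. It shows scaled dot-product attention with a causal mask, then several heads run side by side and concatenated.

```python
import math
import random

def matmul(a, b):
    """Multiply matrices given as lists of rows: (n x k) @ (k x m) -> (n x m)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    """Numerically stable softmax; -inf entries receive weight 0."""
    top = max(row)
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention(q, k, v):
    """Scaled dot-product attention for one head with a causal mask.

    q, k: (n x d_k); v: (n x d_v). Token i may attend only to tokens 0..i.
    Returns (outputs, attention_weights).
    """
    d_k = len(q[0])
    scores = matmul(q, transpose(k))  # (n x n) query-key dot products
    weights = []
    for i, row in enumerate(scores):
        scaled = [s / math.sqrt(d_k) if j <= i else float("-inf")
                  for j, s in enumerate(row)]
        weights.append(softmax(scaled))
    return matmul(weights, v), weights  # weighted sum of value vectors

def multi_head_attention(x, heads, w_out):
    """Run each head on its own projections, concatenate, then project back.

    x: (n x d_model); heads: list of (w_q, w_k, w_v), each (d_model x d_head);
    w_out: (num_heads * d_head x d_model).
    """
    outputs = []
    for w_q, w_k, w_v in heads:
        out, _ = causal_attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))
        outputs.append(out)
    concat = [sum((o[i] for o in outputs), []) for i in range(len(x))]
    return matmul(concat, w_out)

rng = random.Random(0)

def rand(rows, cols):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

d_model, d_head, num_heads, n = 8, 4, 2, 3
x = rand(n, d_model)  # three token vectors
heads = [(rand(d_model, d_head), rand(d_model, d_head), rand(d_model, d_head))
         for _ in range(num_heads)]
w_out = rand(num_heads * d_head, d_model)
y = multi_head_attention(x, heads, w_out)
print(len(y), len(y[0]))  # 3 8
```

The first token can only attend to itself, so its attention row is always `[1.0, 0.0, 0.0]`; later tokens spread their weight over themselves and earlier positions.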

Output Generation

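After processing through the transformer layers, the model generates output one token at a time. The final layer produces a score (logit) for every token in the vocabulary, and a softmax turns these scores into a probability distribution over the next token. The next token is then chosen from this distribution, either by taking the most probable token (greedy decoding) or by sampling, often with settings such as temperature that control how random the choice is. The chosen token is appended to the sequence and the process repeats until an end-of-sequence token is produced or a length limit is reached.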
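The generation loop can be sketched as follows. The `toy_logits` function is a hypothetical stand-in for the whole transformer: it deterministically favours the next word of one fixed sentence so that the output is predictable. The greedy and temperature-sampling choices illustrate the general technique; ChatGPT's exact decoding settings are not described in this document.

```python
import math
import random

VOCAB = ["<eos>", "the", "cat", "sat", "on", "mat"]
SENTENCE = [1, 2, 3, 4, 1, 5, 0]  # "the cat sat on the mat <eos>"

def toy_logits(token_ids):
    """Hypothetical model stub: one score per vocabulary entry.

    A real model computes these from the full context; this stub just
    favours the next word of SENTENCE based on how many tokens exist so far.
    """
    nxt = SENTENCE[min(len(token_ids), len(SENTENCE) - 1)]
    return [4.0 if i == nxt else 0.0 for i in range(len(VOCAB))]

def softmax(logits, temperature=1.0):
    """Turn logits into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def next_token(logits, temperature, rng):
    """Greedy when temperature == 0, otherwise sample from the softmax."""
    if temperature == 0.0:
        # argmax of the logits is also the argmax of the softmax probabilities
        return max(range(len(logits)), key=lambda i: logits[i])
    probs = softmax(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def generate(prompt_ids, max_new_tokens=20, temperature=0.0, seed=0):
    """Append one token at a time until <eos> (id 0) or the length limit."""
    rng = random.Random(seed)
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        tok = next_token(toy_logits(ids), temperature, rng)
        if tok == 0:
            break
        ids.append(tok)
    return ids

out = generate([1])  # prompt: "the"
print(" ".join(VOCAB[i] for i in out))  # the cat sat on the mat
```

With `temperature=0.0` the output is always the same; with a positive temperature, less likely tokens are occasionally chosen, which makes responses more varied.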

Training and Fine-Tuning

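ChatGPT is first pre-trained on vast amounts of text to predict the next token in a sequence. This is often called self-supervised (or unsupervised) learning, because the training targets come from the text itself rather than from human labels. The model is then fine-tuned with human feedback, using conversations written by human trainers and reinforcement learning from human feedback (RLHF), to improve the quality and relevance of its responses.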
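The pre-training objective can be sketched as an average cross-entropy loss over next-token predictions. This sketch covers only the pre-training step; the human-feedback fine-tuning stage is not shown. The logits are supplied by hand and `next_token_loss` is a hypothetical helper name.

```python
import math

def log_softmax(logits):
    """Numerically stable log of the softmax probabilities."""
    top = max(logits)
    log_total = top + math.log(sum(math.exp(x - top) for x in logits))
    return [x - log_total for x in logits]

def next_token_loss(logits_per_position, token_ids):
    """Average cross-entropy of predicting token_ids[t + 1] from position t.

    logits_per_position[t] holds the model's scores over the vocabulary after
    reading token_ids[0..t]. The target is simply the next token of the text,
    so no human labels are needed.
    """
    targets = token_ids[1:]
    losses = [-log_softmax(logits)[target]
              for logits, target in zip(logits_per_position, targets)]
    return sum(losses) / len(losses)

tokens = [0, 2, 1, 3]  # a 4-token sequence over a 4-token vocabulary
uniform = [[0.0] * 4 for _ in range(len(tokens) - 1)]  # no preference at all
print(round(next_token_loss(uniform, tokens), 3))  # 1.386, i.e. ln(4)
```

Training adjusts the model's weights to lower this loss; a model that assigns high probability to each actual next token drives it toward zero.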

In summary, ChatGPT leverages the Transformer architecture's ability to model long-range dependencies and context through self-attention, enabling it to generate coherent, contextually relevant, and human-like text responses based on the input it receives.
