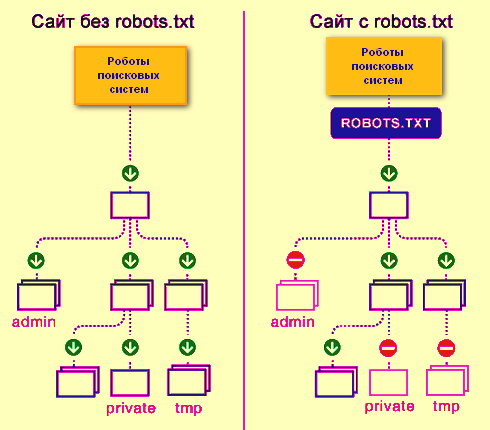

A robots.txt file is a plain text file placed in a website’s root directory that tells web crawlers (user agents) which parts of the site they may or may not crawl. Its syntax consists of one or more groups of directives. Each group starts with a User-agent line naming the target crawler, followed by rules such as Disallow and Allow that control crawling permissions.

Key Syntax Elements and Directives

-

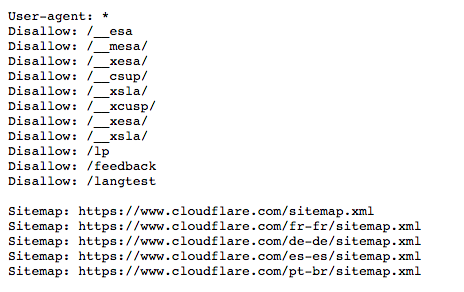

User-agent: Specifies the name of the crawler the rules apply to. Use an asterisk (

*) to target all crawlers. Each group begins with this line. -

Disallow: Defines URL paths that the specified crawler is not allowed to access. An empty value means no restrictions (full access).

-

Allow: Specifies URL paths that are allowed to be crawled, even if a broader disallow rule exists for a parent directory.

-

Sitemap: (Optional) Provides the URL of the sitemap for the site.
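How an Allow rule can win over a broader Disallow rule is easier to see in code. The following is a minimal sketch, not any crawler's actual implementation: it assumes plain prefix matching with "longest matching path wins" precedence (the approach described in RFC 9309) and ignores wildcards. The function name `is_allowed` and the sample paths are hypothetical.

```python
def is_allowed(rules, path):
    """Decide whether `path` may be crawled under one group's rules.

    `rules` is a list of ("allow" | "disallow", path_prefix) tuples.
    Assumption: the longest matching prefix wins; on a tie, allow wins.
    """
    best_len = -1
    allowed = True  # no matching rule means the path may be crawled
    for kind, prefix in rules:
        if not prefix:
            continue  # an empty value imposes no restriction
        if path.startswith(prefix):
            longer = len(prefix) > best_len
            tie_for_allow = len(prefix) == best_len and kind == "allow"
            if longer or tie_for_allow:
                best_len = len(prefix)
                allowed = (kind == "allow")
    return allowed


rules = [
    ("disallow", "/private/"),
    ("allow", "/private/public-info.html"),
]

print(is_allowed(rules, "/private/secret.html"))       # False
print(is_allowed(rules, "/private/public-info.html"))  # True
print(is_allowed(rules, "/index.html"))                # True
```

Individual crawlers may resolve overlapping rules differently, so treat this as an illustration of the idea rather than a guarantee of behavior.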

Format Rules

- Each directive is written as <field>: <value>, where the field is case-insensitive but the value (URL path) is case-sensitive.
- Comments start with # and are ignored by crawlers.
- Multiple groups can exist for different user-agents; a crawler follows the group that most specifically matches its name and falls back to the * group only when no named group matches, regardless of the order in which the groups appear.
- Paths in Disallow and Allow are relative to the root of the website.
- Spaces around the colon are optional but recommended for readability.
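The format rules above can be sketched as a small line parser. This is an illustrative helper (the name `parse_line` is made up for this example), assuming that everything after a `#` is a comment and that the first colon separates field from value.

```python
def parse_line(line):
    """Split one robots.txt line into (field, value), or return None.

    The field is lowercased because it is case-insensitive; the value is
    kept exactly as written because URL paths are case-sensitive.
    """
    # Drop everything from the first "#" onward: comments are ignored.
    line = line.split("#", 1)[0].strip()
    if not line or ":" not in line:
        return None
    # Split on the first colon only, so URLs in the value stay intact.
    field, value = line.split(":", 1)
    return field.strip().lower(), value.strip()


print(parse_line("DISALLOW: /Private/  # keep out"))
print(parse_line("Allow:/private/public-info.html"))
print(parse_line("Sitemap: http://www.example.com/sitemap.xml"))
print(parse_line("# only a comment"))
```

Splitting on the first colon only is what keeps the `http://` in a Sitemap value from being cut apart.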

Example of a robots.txt file

    User-agent: *
    Disallow: /private/
    Allow: /private/public-info.html

    User-agent: Googlebot
    Disallow:

    User-agent: Bingbot
    Disallow: /not-for-bing/

    Sitemap: http://www.example.com/sitemap.xml

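In this example, the * group blocks crawlers from /private/ except for one specifically allowed page, Googlebot gets full access, Bingbot is kept out of /not-for-bing/, and the Sitemap line gives the sitemap location. Because Googlebot and Bingbot have their own groups, the * rules apply only to other crawlers.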
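One way to check how the groups in this example are selected is Python's standard `urllib.robotparser` module. The sketch below feeds the example to it directly instead of fetching a URL. The crawler name `OtherBot` and the page paths are hypothetical, and `site_maps()` assumes Python 3.8 or newer. The Allow exception is deliberately not checked here, because how a parser resolves overlapping Allow and Disallow rules can differ from one implementation to another.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /private/public-info.html

User-agent: Googlebot
Disallow:

User-agent: Bingbot
Disallow: /not-for-bing/

Sitemap: http://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse text directly, no network access

# (crawler name, path) pairs to test; names and paths are illustrative.
checks = [
    ("Googlebot", "/private/secret.html"),
    ("Bingbot", "/not-for-bing/page.html"),
    ("Bingbot", "/private/secret.html"),
    ("OtherBot", "/private/secret.html"),
]
results = {check: parser.can_fetch(*check) for check in checks}

for (agent, path), allowed in results.items():
    print(f"{agent:10} {path:26} {'allowed' if allowed else 'blocked'}")

print(parser.site_maps())
```

Note the third check: Bingbot is allowed into /private/ because its own group replaces the * group rather than adding to it.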

Common Syntax Errors to Avoid

- Missing or malformed User-agent lines.
- Incorrect path formats in Disallow or Allow directives.
- Improper comment usage (comments must start with #).
- Case sensitivity mistakes in URL paths.

Correcting these ensures search engines properly interpret your rules and crawl your site as intended.
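Some of these mistakes can be caught mechanically. The following is a rough linter sketch, assuming only the four directives covered in this article count as known fields (real crawlers may recognize others). The function name `lint_robots` and the messages are invented for illustration. Case-sensitivity mistakes cannot be detected this way, since that requires knowing the site's actual URLs.

```python
KNOWN_FIELDS = {"user-agent", "disallow", "allow", "sitemap"}  # assumption: article's directives only


def lint_robots(text):
    """Return a list of (line_number, message) for likely syntax problems."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # strip comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' separator"))
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_FIELDS:
            problems.append((number, f"unknown field '{field}'"))
        elif field == "user-agent":
            if not value:
                problems.append((number, "empty User-agent value"))
            seen_user_agent = True
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append((number, "rule appears before any User-agent line"))
            if value and not value.startswith(("/", "*")):
                problems.append((number, "path should start with '/'"))
    return problems


sample = """Disallow: /tmp/
User agent: *
User-agent: *
Disallow: private/
Allow: /private/ok.html  # fine
"""

for number, message in lint_robots(sample):
    print(f"line {number}: {message}")
```

In the sample, line 1 has a rule with no preceding User-agent line, line 2 misspells the field name, and line 4 has a path without a leading slash.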

In summary, the robots.txt file uses a simple but strict syntax of user-agent groups and allow/disallow rules to guide crawler behavior, helping you manage what gets crawled and how much load crawlers place on your server.
